From Idea to a RAG-Ready SaaS: What Really Happens

#TL;DR

I tried to build a “simple” AI chat product.

I ended up building a production-grade application architecture.

This post documents what actually happens when you take a SaaS from local development to a real, deployable system — including the technical decisions, mistakes, and lessons learned along the way.

Source code:

https://github.com/sofman65/privia

Demo:

https://privia-frontend.vercel.app/

#Why I Built This

Most product tutorials stop at:

- a landing page

- basic authentication

- one chat endpoint

- demo-level UX

But real products force you to understand:

- boundaries between frontend and backend

- authentication models across HTTP and WebSocket

- persistence and data consistency

- deployment wiring and CORS

- operational trade-offs before any “AI magic”

I wanted a hands-on, honest project that I could explain end-to-end with technical credibility.

So I built Privia.

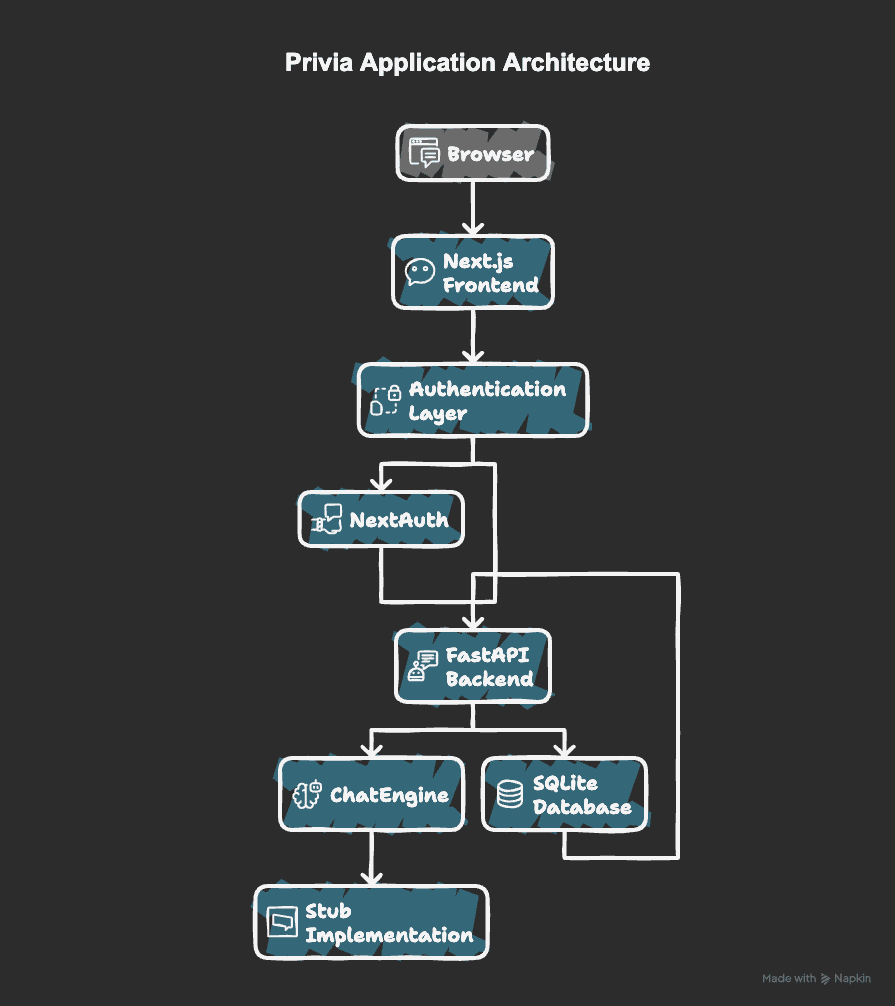

#The Goal

Build a product system that:

- Exposes a FastAPI backend API

- Provides a Next.js App Router frontend

- Supports signup/login plus OAuth

- Persists conversations and messages

- Streams responses in real time (SSE + WebSocket)

- Is fully containerized for deployment

- Is AI-ready (RAG-compatible architecture boundary)

No shortcuts.

No fake architecture diagrams.

The focus was never model quality — it was system correctness and product boundaries.

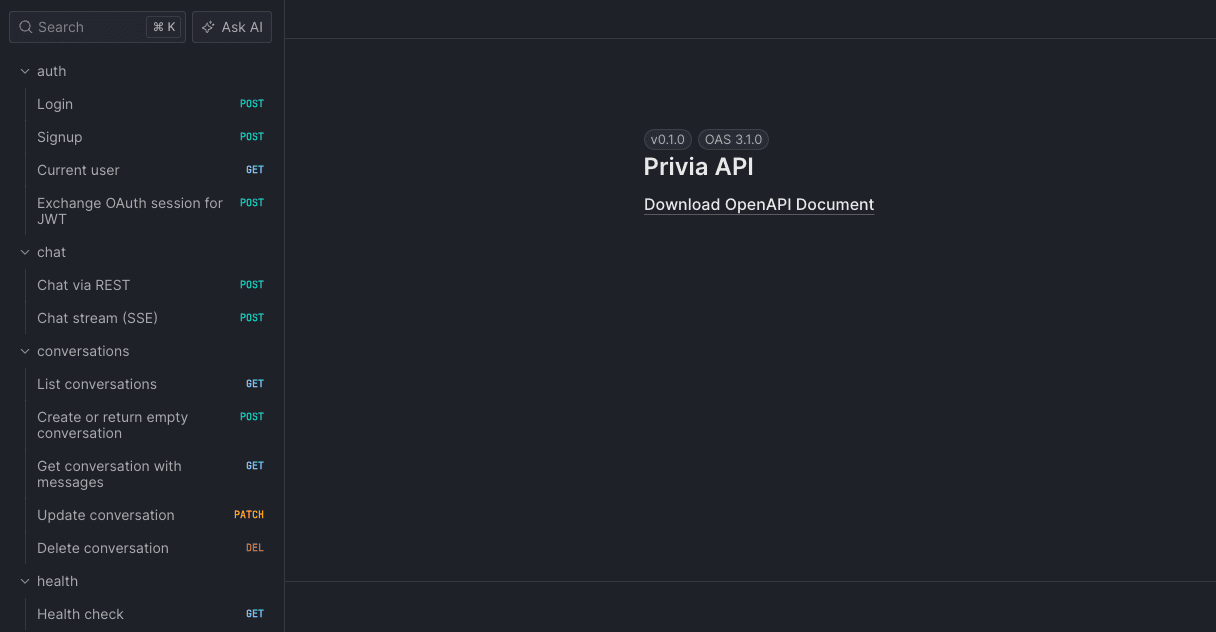

#Step 1 — FastAPI Backend

I started with a clean backend structure:

- /api/health

- /api/auth/*

- /api/query

- /api/stream

- /api/ws/chat

- /api/conversations/*

With clear separation of:

- routes

- schemas

- models

- security

- engine abstraction

At this stage, everything worked perfectly locally.

That was the easy part.

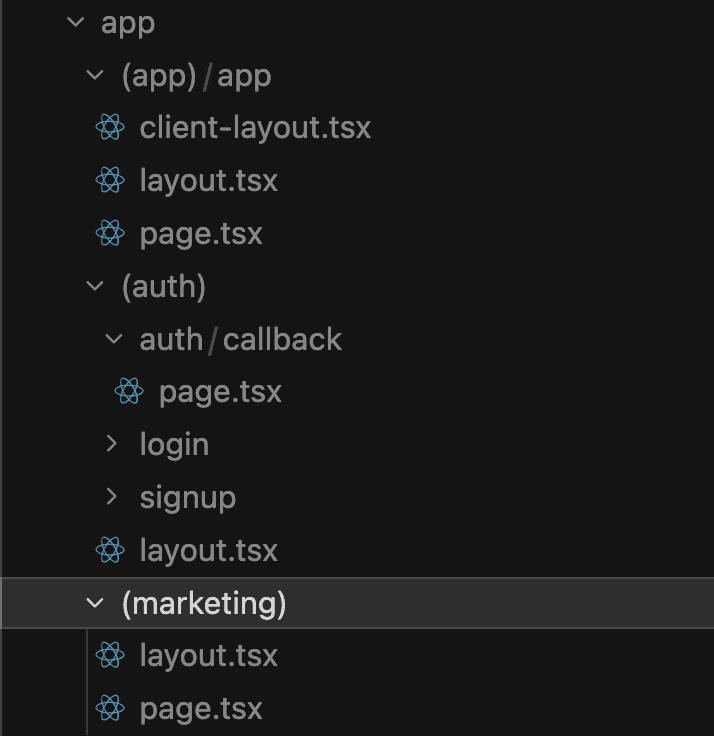

#Step 2 — Next.js Frontend (And the First Reality Check)

I built the frontend using route groups:

- (marketing)

- (auth)

- (app)

The UI looked clean.

Navigation worked.

Auth pages worked.

Then real application behavior exposed problems:

- route guards must run before render

- token state must remain consistent across cookies and in-memory state

- protected routes must not flash unauthenticated UI

This forced me to treat frontend routing as access control, not just navigation.

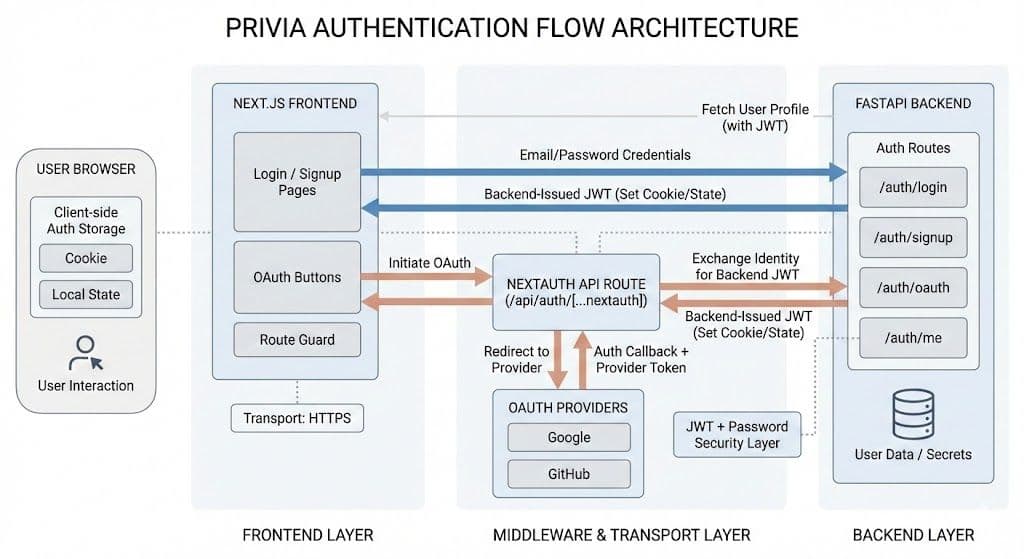

#Step 3 — Authentication: JWT + OAuth Bridge

I implemented two authentication paths:

- Local auth (email/password → backend-issued JWT)

- OAuth via NextAuth → callback → backend JWT exchange

It sounds straightforward until you need one consistent identity model across:

- browser fetch calls

- middleware route protection

- profile hydration

- WebSocket connections

At one point, users appeared “logged in” in the UI, but their tokens were not accepted on every transport.

The fix was disciplined token flow and explicit backend verification across HTTP, SSE, and WebSocket boundaries.

Auth is not one feature — it is a cross-layer architecture concern.
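One way to get a single identity model is to route every transport through the same verification function. The sketch below is illustrative and is not Privia's actual code. It hand-rolls an HS256 JWT-style token with the standard library to stay self-contained (a real backend would normally use a maintained JWT library). The names `issue_token`, `verify_token`, `user_from_http`, and `user_from_websocket`, the `SECRET` value, and the `?token=` query parameter are all assumptions.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Mapping, Optional

SECRET = b"dev-only-secret"  # assumption: a real deployment loads this from the environment


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str) -> str:
    return _b64url(hmac.new(SECRET, signing_input.encode(), hashlib.sha256).digest())


def issue_token(user_id: str, ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Create a header.payload.signature token signed with HMAC-SHA256."""
    issued_at = int(time.time() if now is None else now)
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url(json.dumps({"sub": user_id, "exp": issued_at + ttl_seconds}).encode())
    return f"{header}.{payload}.{_sign(f'{header}.{payload}')}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user id for a correctly signed, unexpired token, else None."""
    try:
        header, payload, signature = token.split(".")
        if not hmac.compare_digest(signature, _sign(f"{header}.{payload}")):
            return None
        claims = json.loads(_b64url_decode(payload))
    except (ValueError, TypeError):
        return None
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        return None
    return claims.get("sub")


def user_from_http(headers: Mapping[str, str]) -> Optional[str]:
    """HTTP and SSE: the token arrives as 'Authorization: Bearer <token>'."""
    scheme, _, token = headers.get("authorization", "").partition(" ")
    return verify_token(token) if scheme.lower() == "bearer" else None


def user_from_websocket(query_params: Mapping[str, str]) -> Optional[str]:
    """WebSocket: the browser WebSocket API cannot set custom headers,
    so this sketch assumes the token is passed as a query parameter."""
    return verify_token(query_params.get("token", ""))
```

Both extractors end in the same `verify_token` call, so a token is judged identically whether it arrives over fetch, SSE, or a WebSocket handshake.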

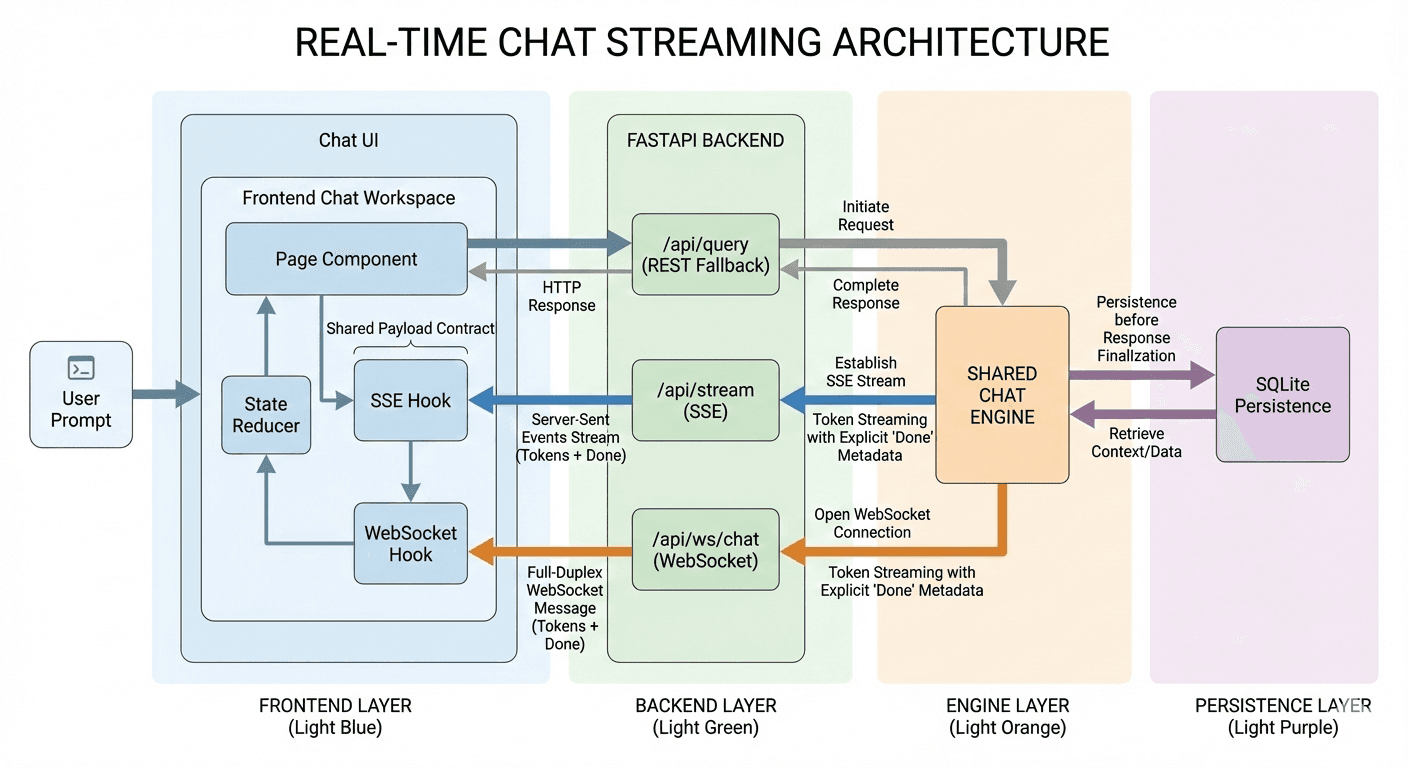

#Step 4 — Streaming Chat: SSE and WebSocket

I implemented dual transport:

- SSE for reliability, as the plain-HTTP path when WebSocket is unavailable

- WebSocket for low-latency live streaming

This is where architectural complexity showed up:

- payload parity across transports

- stop-generation control behavior

- done-event metadata and message finalization

- fallback behavior when WebSocket is unavailable

A key bug came from a mismatch around conversation_id.

The UI looked correct, but backend context continuity was broken.

Fixing that made streaming behavior predictable and data-safe.
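Payload parity is easier to keep when both transports serialize the same event object. The following is a minimal sketch under assumptions, not the project's real contract: the `StreamEvent` fields, the `token`/`done` event types, and the helper names are hypothetical. The point is that `conversation_id` travels on every event and that SSE and WebSocket differ only in framing.

```python
import json
from dataclasses import asdict, dataclass
from typing import Iterable, Iterator, Optional


@dataclass
class StreamEvent:
    type: str                          # "token" while streaming, "done" at the end
    conversation_id: str               # present on every event so continuity can be checked
    content: str = ""
    message_id: Optional[str] = None   # filled in on "done" to finalize the message


def generate_events(
    conversation_id: str, tokens: Iterable[str], message_id: str
) -> Iterator[StreamEvent]:
    """Yield one event per token, then a single done event with metadata."""
    for token in tokens:
        yield StreamEvent("token", conversation_id, content=token)
    yield StreamEvent("done", conversation_id, message_id=message_id)


def to_sse(event: StreamEvent) -> str:
    """SSE framing: a 'data:' line followed by a blank line."""
    return f"data: {json.dumps(asdict(event))}\n\n"


def to_ws(event: StreamEvent) -> str:
    """WebSocket framing: the same JSON sent as one text message."""
    return json.dumps(asdict(event))


def parse_sse(frame: str) -> dict:
    """Decode a frame produced by to_sse back into a dict."""
    return json.loads(frame[len("data: "):].strip())
```

With this shape, a client can compare the `conversation_id` on the done event against the one it sent and refuse to finalize a message on mismatch.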

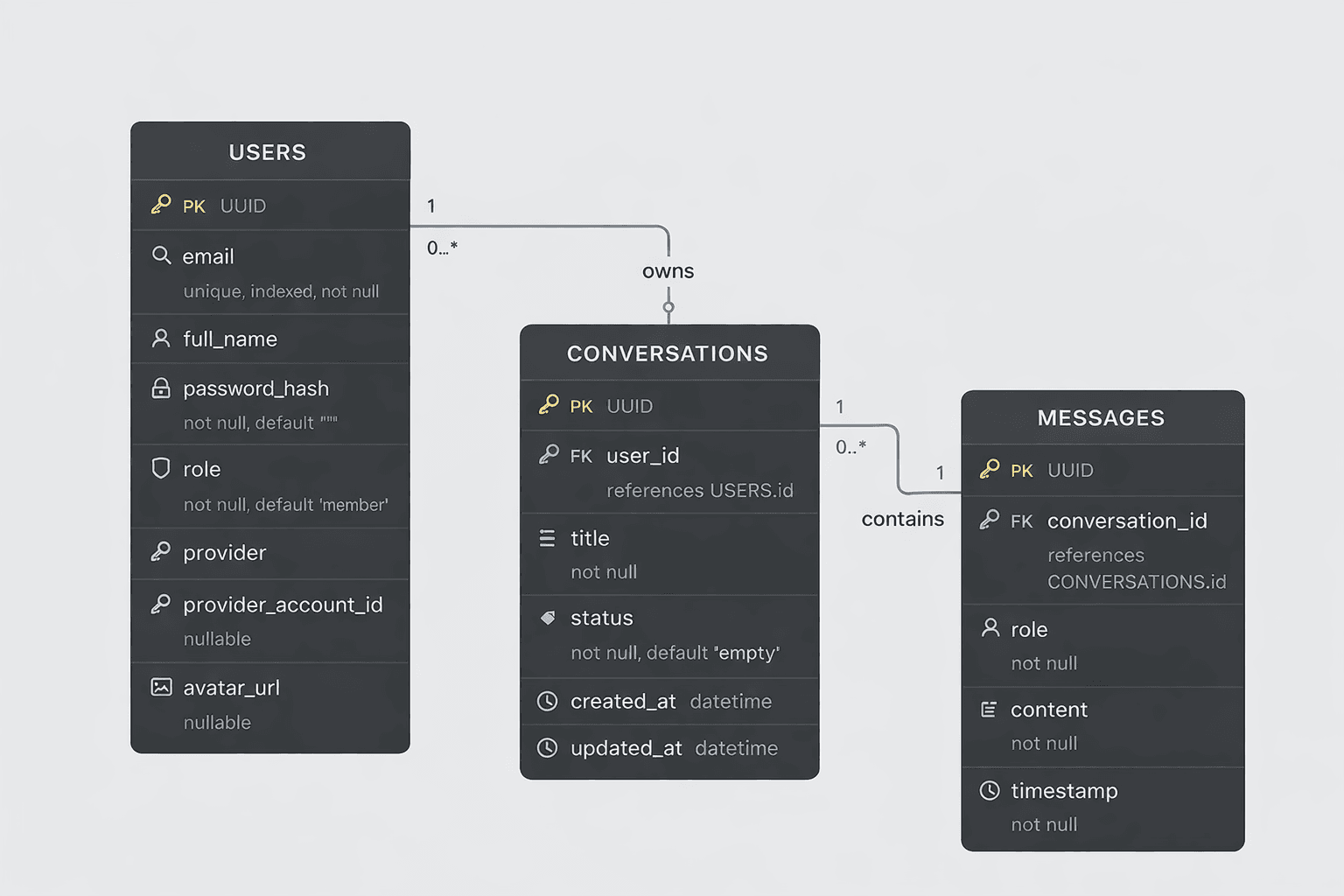

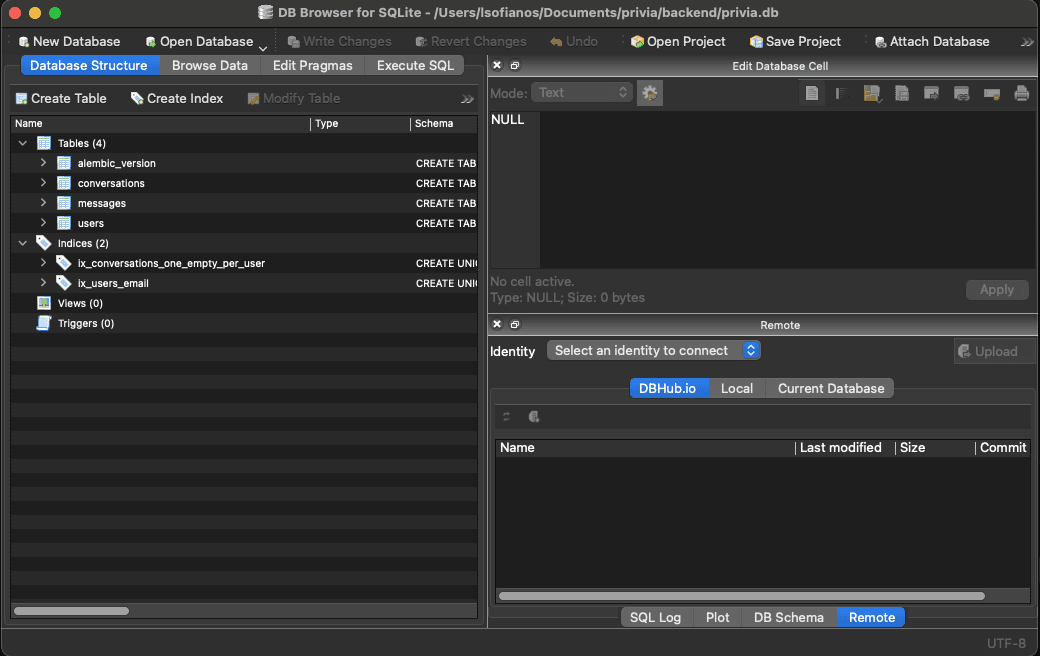

#Step 5 — Persistence: Conversations and Messages

I used SQLite + SQLAlchemy + Alembic with:

- users

- conversations

- messages

Design details mattered:

- idempotent conversation creation

- status lifecycle (empty → active)

- ownership checks on all conversation operations

- delete cascade on messages

- migration-driven schema evolution

This was not “just CRUD.”

It was application state integrity.

#Constraints & Data Integrity

Beyond the basic table structure, Privia relies on a small set of explicit database constraints to enforce core product invariants.

Unique user identities

Email addresses are enforced as unique via a dedicated index, ensuring a single canonical account per email.

Cascading message lifecycle

Messages are strictly scoped to their parent conversation and removed automatically when a conversation is deleted.

At most one empty conversation per user

A partial unique index enforces that each user can have at most one empty conversation at a time — a subtle but important invariant.
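The three invariants above can be sketched directly in SQLite. This is an illustration under assumptions: it uses raw `sqlite3` DDL instead of the SQLAlchemy models and Alembic migrations the project relies on, and the column names, index names, and helper functions are hypothetical. Only the table names and the empty/active statuses come from the description above.

```python
import sqlite3

SCHEMA = """
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    email TEXT NOT NULL
);
-- One canonical account per email.
CREATE UNIQUE INDEX ix_users_email ON users (email);

CREATE TABLE conversations (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users (id) ON DELETE CASCADE,
    status TEXT NOT NULL DEFAULT 'empty' CHECK (status IN ('empty', 'active'))
);
-- Partial unique index: at most one 'empty' conversation per user.
CREATE UNIQUE INDEX ux_one_empty_conversation
    ON conversations (user_id) WHERE status = 'empty';

CREATE TABLE messages (
    id INTEGER PRIMARY KEY,
    conversation_id INTEGER NOT NULL REFERENCES conversations (id) ON DELETE CASCADE,
    content TEXT NOT NULL
);
"""


def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys by default
    conn.executescript(SCHEMA)
    return conn


def get_or_create_empty_conversation(conn: sqlite3.Connection, user_id: int) -> int:
    """Idempotent creation: reuse the user's empty conversation if one exists."""
    row = conn.execute(
        "SELECT id FROM conversations WHERE user_id = ? AND status = 'empty'",
        (user_id,),
    ).fetchone()
    if row:
        return row[0]
    cursor = conn.execute("INSERT INTO conversations (user_id) VALUES (?)", (user_id,))
    return cursor.lastrowid


def add_message(conn: sqlite3.Connection, conversation_id: int, content: str) -> None:
    """Store a message and move the conversation from 'empty' to 'active'."""
    conn.execute(
        "INSERT INTO messages (conversation_id, content) VALUES (?, ?)",
        (conversation_id, content),
    )
    conn.execute("UPDATE conversations SET status = 'active' WHERE id = ?", (conversation_id,))
```

The application-level check in `get_or_create_empty_conversation` gives the idempotent behavior, and the partial index is the backstop: a second empty conversation for the same user fails with `sqlite3.IntegrityError` even if two requests race past the check.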

#A Critical Lesson — “Connected” Doesn’t Mean “Consistent”

At one point, everything looked correct:

- messages rendered

- stream tokens arrived

- sidebar updated

But backend truth diverged from UI behavior:

- deletes were local only

- conversation hydration was incomplete

- completion metadata was ignored in some flows

The issue was not rendering.

It was:

- contract mismatch between frontend and backend

- incomplete synchronization logic

- missing lifecycle handling after streaming completion

This taught me a core product lesson:

A chat UI can look healthy while your data model is drifting underneath.

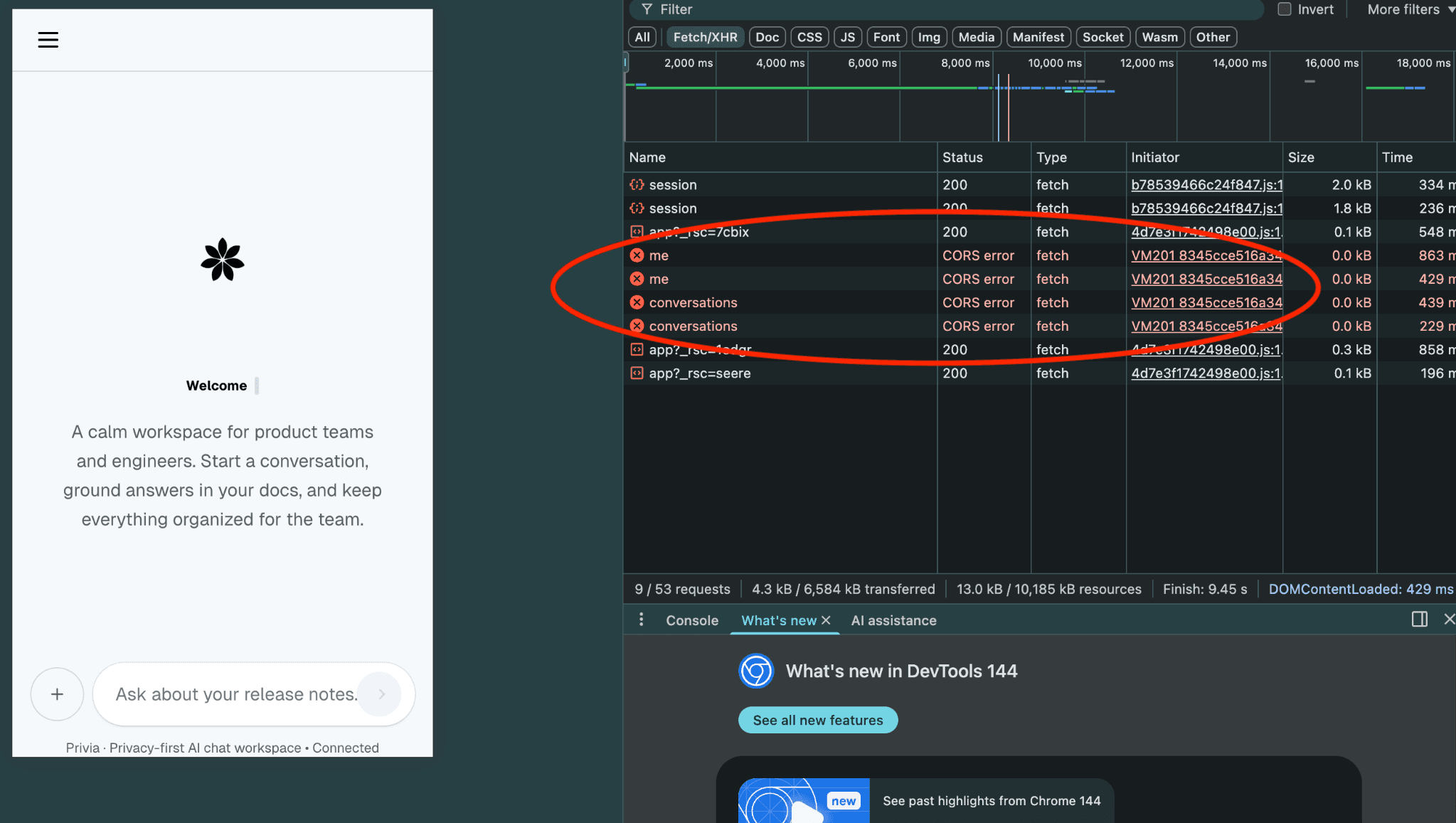

#Step 6 — Production Wiring: CORS, Env, and Boundary Contracts

Local development hides deployment realities.

Once a deployed frontend talks to a deployed backend, strict boundaries appear immediately.

I had to make production-safe decisions around:

- CORS origin handling

- environment-driven backend URLs

- WebSocket URL derivation (ws:// vs wss://)

- auth token handling across HTTP and WS

- deterministic startup behavior

A backend that works on localhost can still fail instantly in production due to origin and transport configuration.
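The WebSocket URL derivation is a small example of such a decision. In Privia this logic would live in the frontend; it is sketched here in Python only to keep the examples in one language. The function name `derive_ws_url` and the example hostnames are assumptions, while `/api/ws/chat` is the route listed earlier.

```python
from urllib.parse import urlsplit, urlunsplit


def derive_ws_url(api_base_url: str, path: str = "/api/ws/chat") -> str:
    """Map an http(s) API base URL to the matching ws(s) URL.

    https must become wss: a page served over HTTPS is not allowed to open
    an insecure ws:// connection to a remote host.
    """
    parts = urlsplit(api_base_url)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    ws_scheme = "wss" if parts.scheme == "https" else "ws"
    # Keep host and port, replace the path, drop query and fragment.
    return urlunsplit((ws_scheme, parts.netloc, path, "", ""))
```

Deriving the WebSocket URL from the single configured API URL means there is one environment variable to get right per deployment instead of two that can drift apart.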

#Step 7 — Deployment Readiness and CI Discipline

Privia is containerized and deployment-ready:

- backend Docker image

- startup migration execution

- non-root runtime

- explicit writable storage path for SQLite

- frontend environment-based API/WS targeting

I also focused on testability:

- backend route tests

- auth tests

- WebSocket auth and persistence tests

- type-safe frontend checks

No SSH-first workflows.

No hand-edited production behavior.

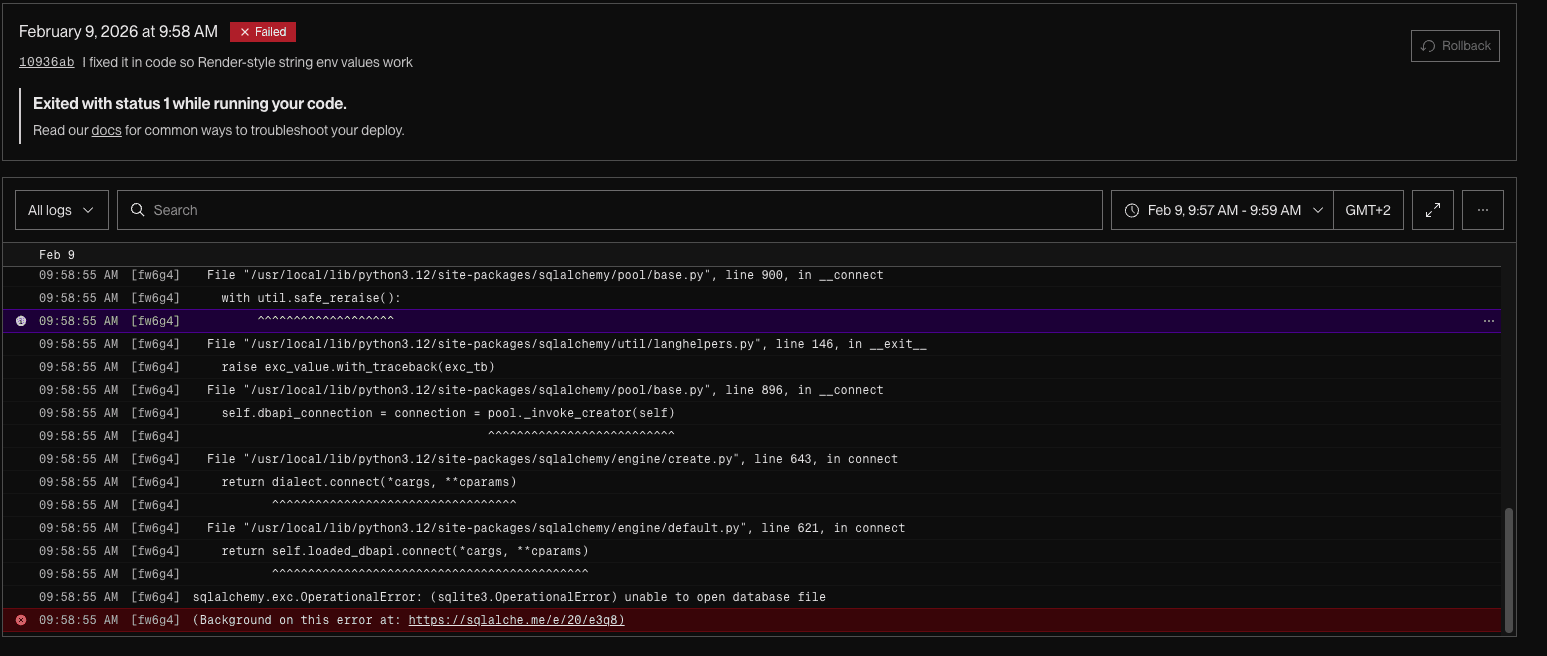

#Step 7.5 — Deployment Reality Check (What Actually Broke)

Once the frontend and backend were deployed independently, a new class of issues surfaced — not bugs in business logic, but boundary failures:

- OAuth redirects working locally but failing in production

- backend endpoints reachable, yet blocked by the browser

- auth succeeding server-side but silently failing in the UI

- “connected” states masking underlying CORS and origin mismatches

None of these were visible locally.

They only appeared when:

- the frontend ran on Vercel

- the backend ran on a separate PaaS

- cookies, redirects, and WebSockets crossed real origins

#CORS Is Not a Checkbox

Even though the backend responded to health checks, browser requests failed due to origin mismatches and blocked preflight requests.

A backend can be reachable and still be functionally unusable from the browser.
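Most origin mismatches come down to string details: a trailing slash, `http` instead of `https`, or a missing port. Below is a minimal sketch of environment-driven origin parsing that fails loudly on malformed values. The variable name `CORS_ALLOWED_ORIGINS` and both function names are assumptions. The resulting list is the kind of value one would pass to the `allow_origins` parameter of FastAPI's `CORSMiddleware`.

```python
from typing import List, Mapping
from urllib.parse import urlsplit


def parse_allowed_origins(
    env: Mapping[str, str], key: str = "CORS_ALLOWED_ORIGINS"
) -> List[str]:
    """Parse a comma-separated origin list, normalizing trailing slashes."""
    origins: List[str] = []
    for raw in env.get(key, "").split(","):
        origin = raw.strip().rstrip("/")
        if not origin:
            continue
        parts = urlsplit(origin)
        # An origin is scheme + host (+ port) only; a path means a misconfiguration.
        if parts.scheme not in ("http", "https") or not parts.netloc or parts.path:
            raise ValueError(f"not a valid origin: {raw!r}")
        origins.append(origin)
    return origins


def is_origin_allowed(origin: str, allowed: List[str]) -> bool:
    """Exact string match: scheme, host, and port must all agree."""
    return origin in allowed
```

For example, `https://privia-frontend.vercel.app/` in the environment is normalized to the slash-free form the browser actually sends in the `Origin` header, while `http://privia-frontend.vercel.app` is still rejected because the scheme differs.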

#Persistence on PaaS Is Not “Just SQLite”

SQLite worked locally and failed instantly in production until:

- a writable volume was configured

- container permissions were fixed

- migrations ran deterministically at startup

Lesson:

Local success says almost nothing about production readiness.

Production exposes architectural truth faster than any test suite.
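A cheap guard against the SQLite failure is to check the storage directory at startup, before migrations run. This is a sketch under assumptions, not the project's startup code: the function name `ensure_writable_db_dir` and the example file name are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_writable_db_dir(db_path: str) -> Path:
    """Create the database directory if needed and prove it is writable.

    Raises RuntimeError with the offending path so a misconfigured volume
    or a non-root permission problem fails fast with a clear message.
    """
    directory = Path(db_path).resolve().parent
    try:
        directory.mkdir(parents=True, exist_ok=True)
        # Creating (and automatically deleting) a temp file tests real write access.
        with tempfile.NamedTemporaryFile(dir=directory):
            pass
    except OSError as exc:
        raise RuntimeError(f"SQLite directory is not writable: {directory}") from exc
    return directory
```

Calling something like `ensure_writable_db_dir("/data/privia.db")` as the first startup step turns a confusing runtime database error into an explicit configuration error.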

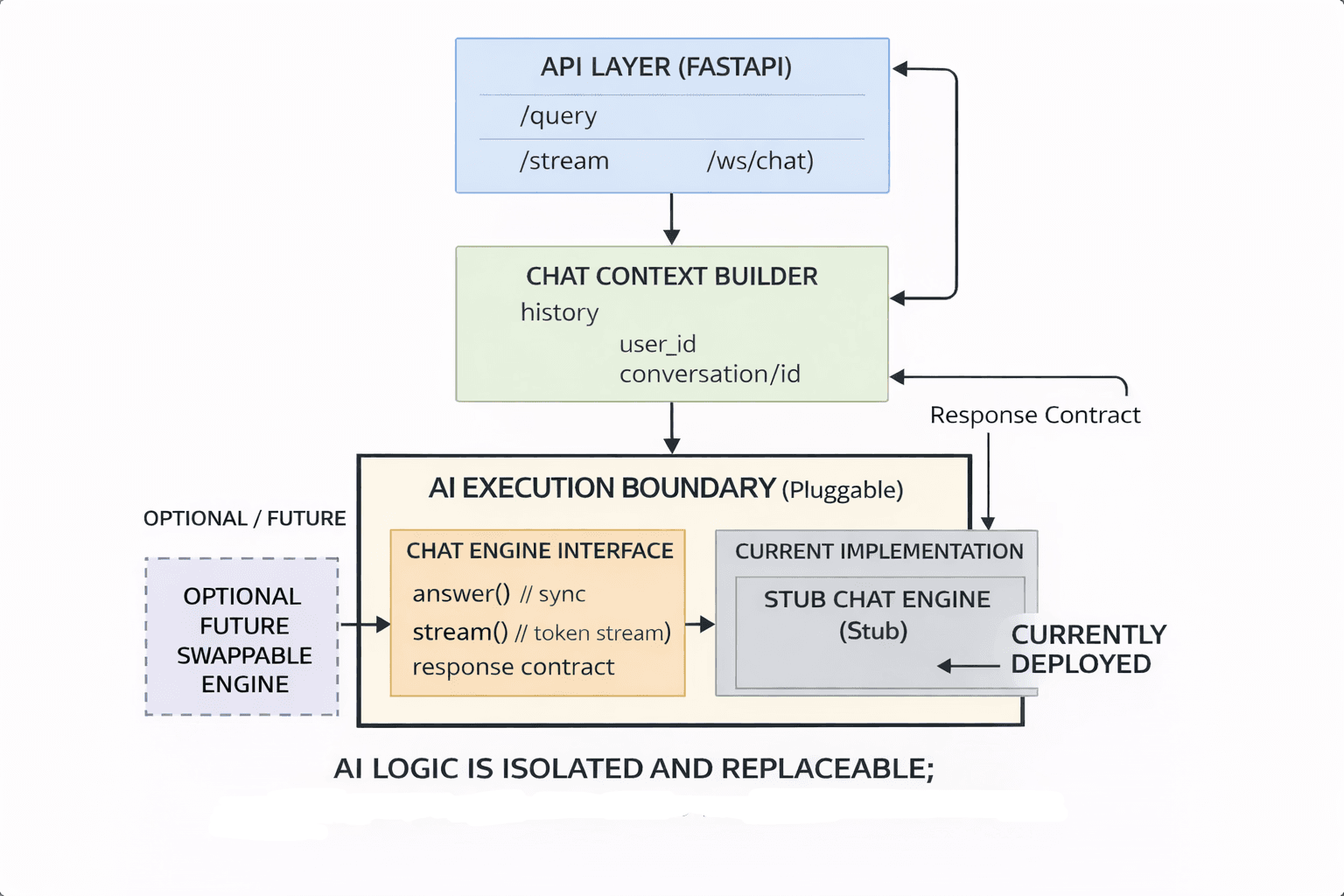

#Step 8 — AI-Ready by Design (RAG-Compatible Architecture)

Privia has a strict ChatEngine boundary:

- API layer does not bind directly to model providers

- engine interface supports sync and streaming modes

- structured response contracts include metadata for sources and mode

- context objects carry conversation history and parameters

The current deployment intentionally uses a stub engine.

This is deliberate:

- architecture first

- contracts first

- transport and persistence correctness first

Inference is the most volatile part of an AI system.

Shipping it without evaluation, cost controls, and observability creates false confidence rather than value.

With this structure, real inference can be plugged in without rewriting the product shell.
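An engine boundary of this kind can be sketched with a structural interface. Everything below is an assumption about shape rather than Privia's actual code: the method names `generate` and `stream`, the `ChatContext` and `ChatResponse` fields, and the `StubEngine` output are hypothetical. Only the ideas (sync plus streaming modes, metadata for sources and mode, a context carrying history and parameters) come from the description above.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Protocol


@dataclass
class ChatContext:
    conversation_id: str
    history: List[Dict[str, str]] = field(default_factory=list)
    parameters: Dict[str, object] = field(default_factory=dict)


@dataclass
class ChatResponse:
    content: str
    mode: str                                          # e.g. "stub" today, "rag" later
    sources: List[str] = field(default_factory=list)   # empty until retrieval exists


class ChatEngine(Protocol):
    """What the API layer depends on; no model provider appears here."""

    def generate(self, query: str, context: ChatContext) -> ChatResponse: ...

    def stream(self, query: str, context: ChatContext) -> Iterator[str]: ...


class StubEngine:
    """Deterministic placeholder that satisfies the ChatEngine protocol."""

    def generate(self, query: str, context: ChatContext) -> ChatResponse:
        return ChatResponse(content=f"[stub] You said: {query}", mode="stub")

    def stream(self, query: str, context: ChatContext) -> Iterator[str]:
        # Emit word-sized chunks whose concatenation equals the sync response.
        words = self.generate(query, context).content.split(" ")
        for index, word in enumerate(words):
            yield word if index == 0 else " " + word


def answer(engine: ChatEngine, query: str, context: ChatContext) -> ChatResponse:
    """Route handlers call the interface, never a concrete provider."""
    return engine.generate(query, context)
```

Swapping in a real engine then means writing another class with the same two methods. The routes, the streaming transports, and the persistence layer keep calling `ChatEngine` and do not change.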

#What This Project Taught Me

- “AI product” work is mostly systems engineering before model quality

- frontend polish cannot compensate for weak backend contracts

- streaming UX demands strict transport and data agreement

- auth is not a feature — it is architecture

- local success says almost nothing about production readiness

- shipping clear boundaries beats shipping fragile intelligence

Most importantly:

Production product engineering is a discipline.

#Why I Stopped Here

This project could keep growing:

- full RAG inference activation

- retrieval evaluation and monitoring

- background document pipelines

- richer workspace controls and governance

But shipping matters.

The real value is not another feature.

It is the end-to-end product architecture, implemented, testable, and explainable.

That is what I want engineers — and the teams hiring them — to see.

#Who This Is For

- Backend engineers moving into product architecture

- Frontend engineers learning cross-service systems

- Developers building real AI products beyond demo chat

If this saves you a few days of confusion, it did its job.

Privia is not where intelligence is demonstrated — it is where intelligence is meant to live.