From Event-Driven Prototype to Cloud-Ready Streaming Platform

Most Kafka demos stop at:

- producer publishes
- consumer reads
- done

That's not how real systems behave.

Real systems receive unreliable webhooks.

They face retries, duplicates, malformed payloads, and partial failures.

They need observability.

They need CI.

They need infrastructure you can explain.

So I built EventFlow: not as a toy demo, but as a reproducible streaming backbone.

🔗 Source code:

https://github.com/sofman65/EventFlow

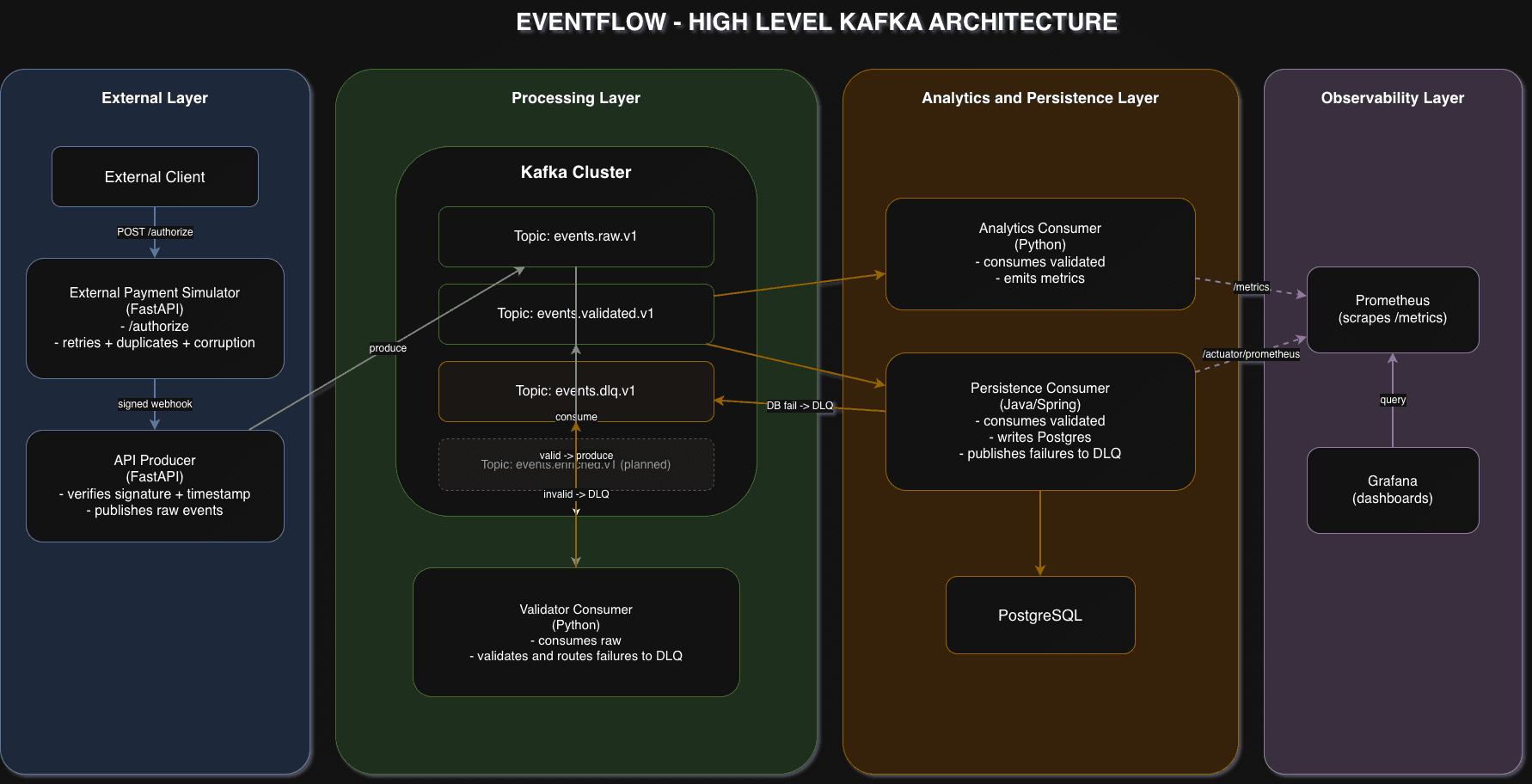

High-Level Architecture

EventFlow models a payment authorization pipeline:

- External client calls `/authorize`
- External payment simulator signs and delivers webhook
- API Producer verifies signature and publishes immutable event
- Kafka routes events through processing stages
- Consumers operate independently
- Failures go to a Dead Letter Queue (DLQ)

No synchronous service calls.

No shared databases.

No hidden coupling.

Everything moves through Kafka.
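
To make "immutable event" concrete, here is a minimal sketch of an event envelope. The class name `PaymentEvent`, its fields, and the helper functions are hypothetical and chosen for illustration; they are not taken from the EventFlow repository.

```python
import json
import uuid
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PaymentEvent:
    """Hypothetical envelope; field names are illustrative, not EventFlow's schema."""

    event_id: str
    event_type: str
    occurred_at: str   # ISO 8601 timestamp in UTC
    payload_json: str  # payload serialized once, so it cannot be mutated afterwards


def new_event(event_type: str, payload: dict) -> PaymentEvent:
    """Build an event with a fresh ID and the current UTC time."""
    return PaymentEvent(
        event_id=str(uuid.uuid4()),
        event_type=event_type,
        occurred_at=datetime.now(timezone.utc).isoformat(),
        payload_json=json.dumps(payload, sort_keys=True),
    )


def to_kafka_value(event: PaymentEvent) -> bytes:
    """Serialize the envelope to the bytes a producer would publish."""
    return json.dumps(asdict(event), sort_keys=True).encode("utf-8")
```

With `frozen=True`, assigning to a field after construction raises an error, so a stage that wants to change something has to emit a new event rather than edit an existing one.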

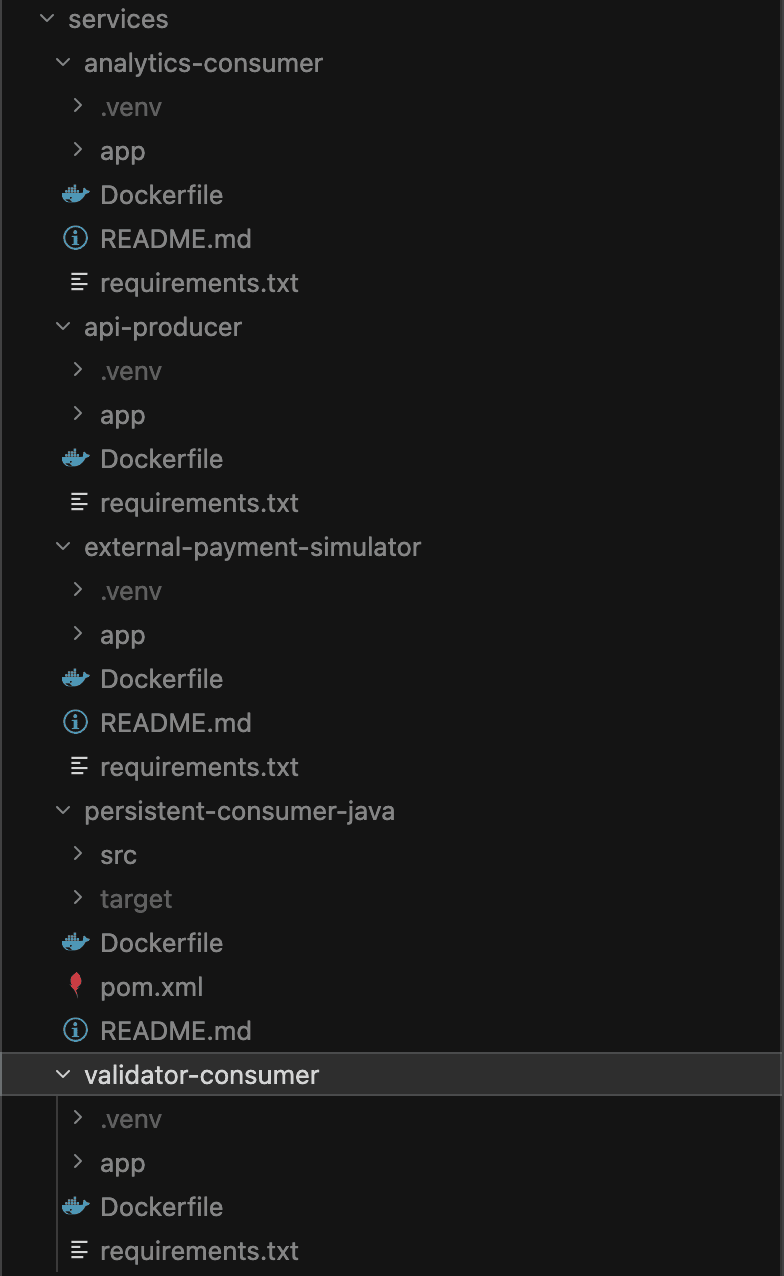

The Services

The system consists of:

- external-payment-simulator (FastAPI)
  - retries
  - duplicate injection
  - corruption injection
- api-producer (FastAPI)
  - verifies webhook signature
  - validates timestamp window
  - publishes `events.raw.v1`
- validator-consumer (Python)
  - enforces schema + business rules
  - routes invalid events to `events.dlq.v1`
- analytics-consumer (Python)
  - emits processing metrics
- persistence-consumer (Java / Spring Boot)
  - writes validated events to PostgreSQL
  - routes DB failures to DLQ

Each service owns exactly one responsibility.

Kafka Topic Flow

The system revolves around explicit topic stages:

- `events.raw.v1`
- `events.validated.v1`
- `events.dlq.v1`
- `events.enriched.v1` (planned)

Flow:

raw → validated → analytics + persistence

↘ DLQ (failures)

Events are immutable.

Failures are data.

New consumers can attach without touching producers.
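
"Failures are data" can be illustrated with a pure routing function: every raw message maps to exactly one output topic, and a rejected message carries its reason with it. The topic names come from the list above; the required fields, the amount rule, and the DLQ record shape are assumptions made for this sketch.

```python
import json

VALIDATED_TOPIC = "events.validated.v1"
DLQ_TOPIC = "events.dlq.v1"

# Hypothetical schema: the real validator's rules are not shown in this post.
REQUIRED_FIELDS = ("event_id", "amount", "currency")


def _dlq_record(raw_value: bytes, reason: str) -> bytes:
    """Wrap the original bytes with the failure reason so nothing is lost."""
    return json.dumps({
        "reason": reason,
        "original": raw_value.decode("utf-8", errors="replace"),
    }).encode("utf-8")


def route(raw_value: bytes) -> tuple[str, bytes]:
    """Return (topic, value) for one message read from the raw topic."""
    try:
        event = json.loads(raw_value)
    except ValueError as exc:  # covers invalid JSON and invalid UTF-8
        return DLQ_TOPIC, _dlq_record(raw_value, f"malformed JSON: {exc}")
    if not isinstance(event, dict):
        return DLQ_TOPIC, _dlq_record(raw_value, "payload is not an object")
    missing = [name for name in REQUIRED_FIELDS if name not in event]
    if missing:
        return DLQ_TOPIC, _dlq_record(raw_value, f"missing fields: {missing}")
    amount = event["amount"]
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount <= 0:
        return DLQ_TOPIC, _dlq_record(raw_value, "amount must be a positive number")
    # Valid events pass through unchanged, which keeps them immutable.
    return VALIDATED_TOPIC, raw_value
```

Because `route` touches no network or broker, it can be unit-tested directly; a consumer loop would only need to read a message, call it, and produce the result to the returned topic.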

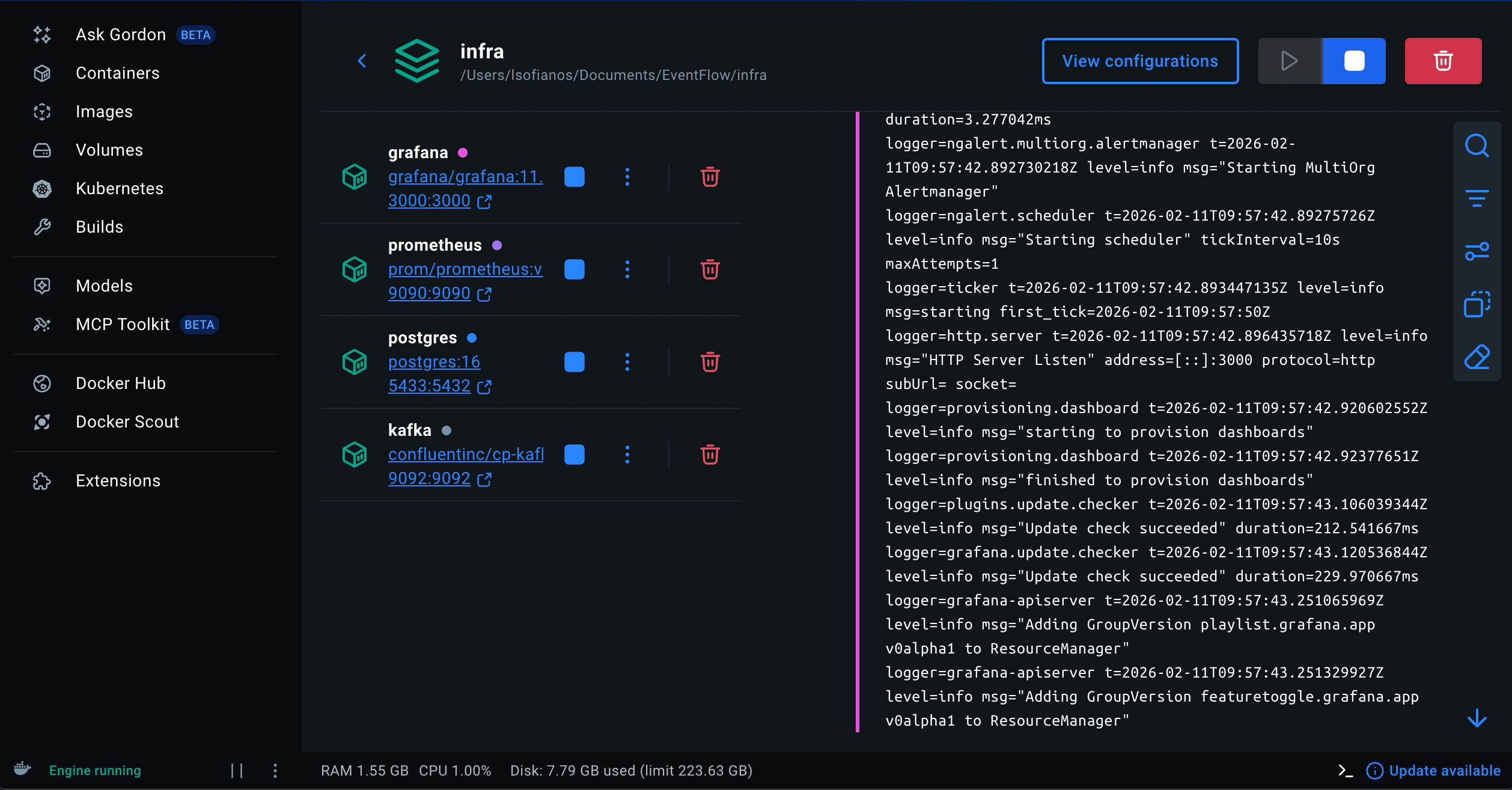

Local Infrastructure (Docker Compose)

Local stack includes:

- Kafka (KRaft mode)

- PostgreSQL

- Prometheus

- Grafana

Everything starts with:

cd infra

docker compose up -d

Reproducibility matters.

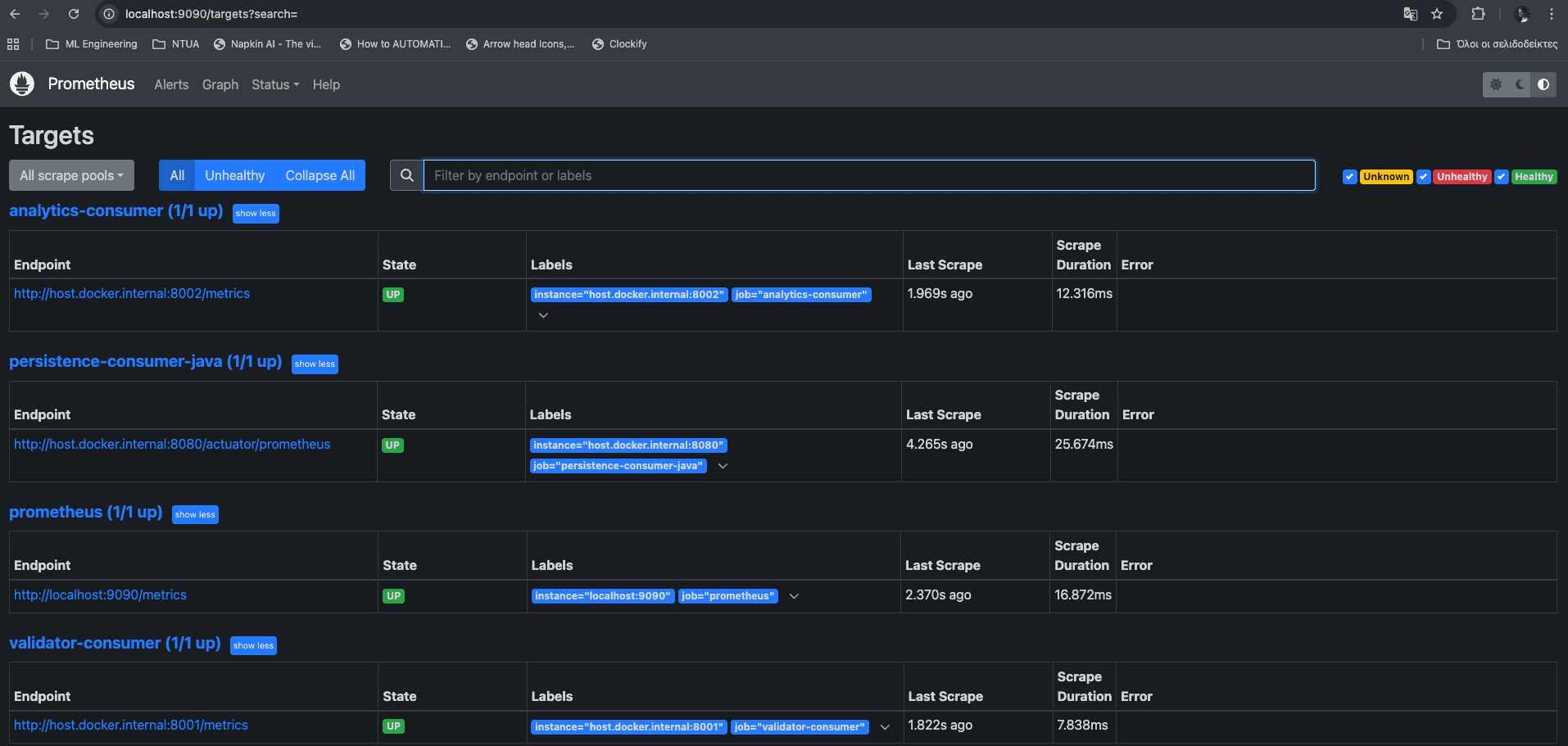

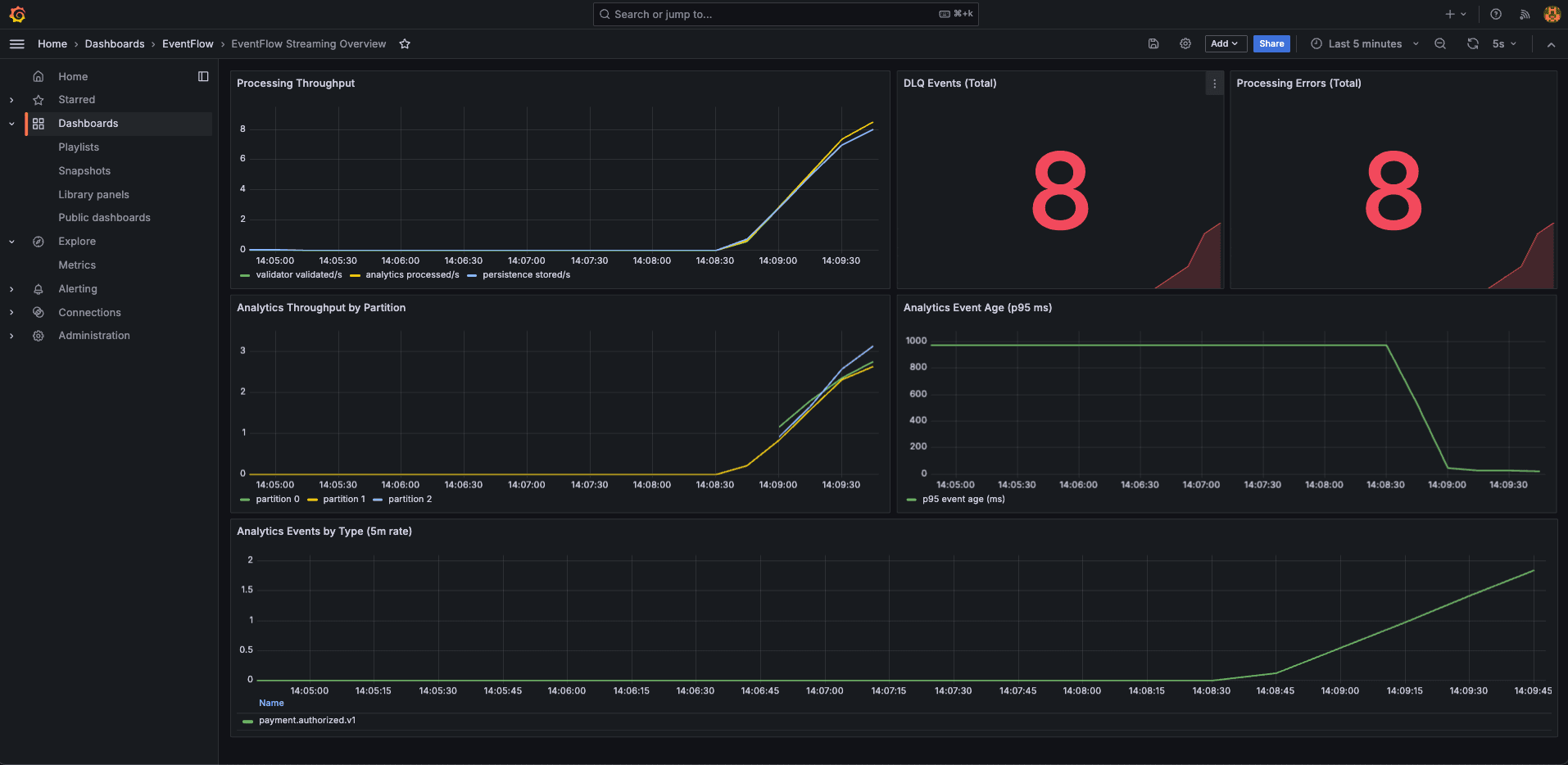

Observability (Prometheus + Grafana)

Prometheus scrapes:

- validator metrics
- analytics metrics
- persistence `/actuator/prometheus`
- simulator metrics

You can see:

- processing throughput

- error rates

- DLQ counts

- event age

Grafana shows real-time streaming behavior.

A key lesson:

A system can be healthy and silent.

Without traffic shape, dashboards mean nothing.

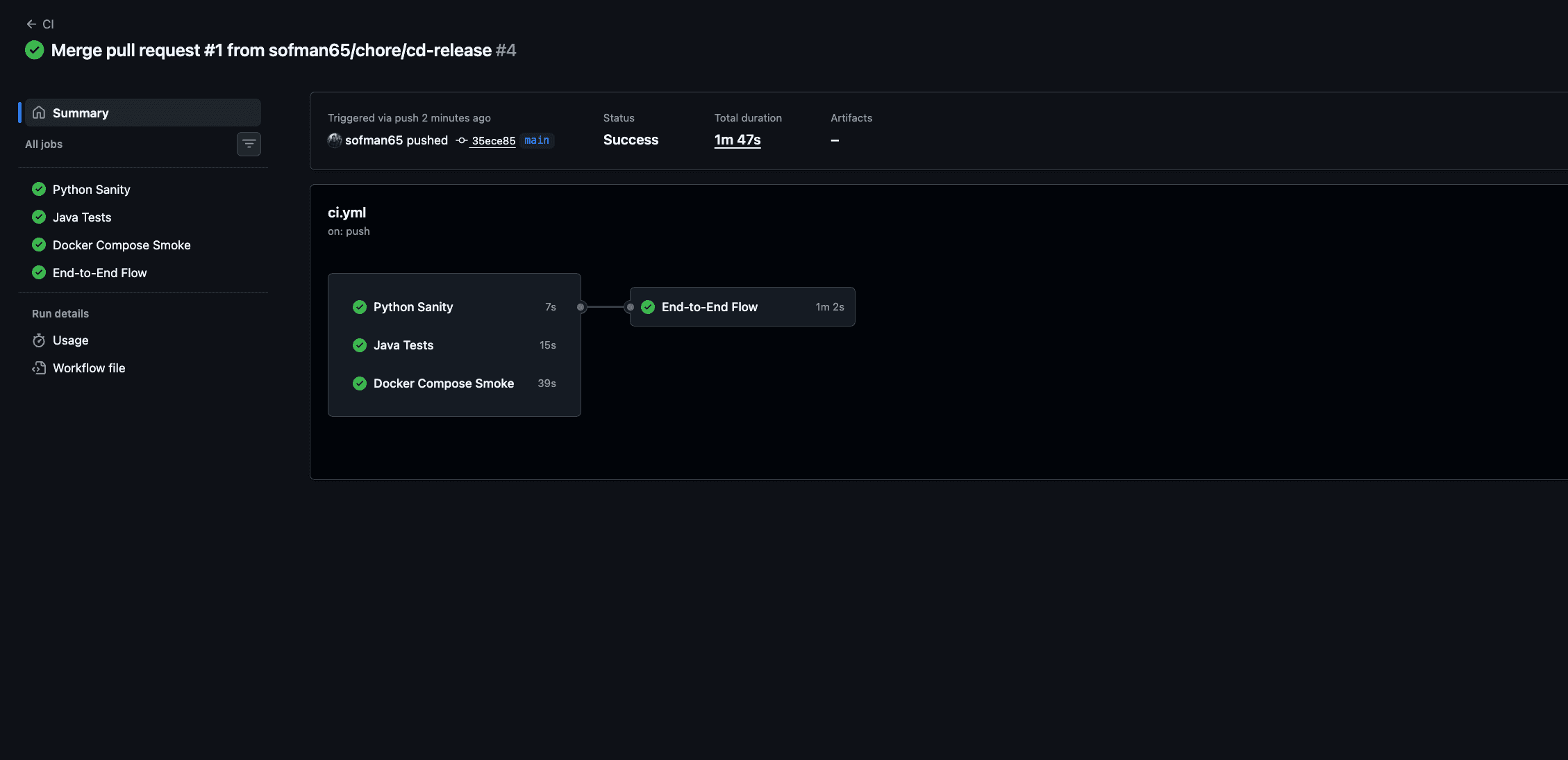

CI Pipeline

CI runs:

- Python compile checks
- Java Maven tests
- Docker Compose smoke validation
- End-to-end pipeline test

Unit tests don't prove distributed systems work.

End-to-end validation does.

Terraform Deployment Readiness

EventFlow is deployment-ready as a reproducible cloud baseline for demos and interviews.

Already in place:

- Terraform stack for VPC, ECS Fargate, MSK, RDS, ALB, ECR, CloudWatch
- Release CD workflow on tags (`v*`, `release-*`)
- Image build and push automation
- End-to-end CI validation before merge

Intentionally not production-hardened yet:

- Kafka auth/TLS hardening

- private-only networking for all tasks

- stricter IAM boundaries

- backup/restore + HA policies

The trade-off is deliberate: the project optimizes for architectural clarity and reproducibility first.

Cost Breakdown (AWS us-east-1, 24/7)

Estimated with current Terraform defaults (as of February 11, 2026):

| Component | Monthly (USD) |
|---|---|
| ECS Fargate (compute + memory) | 63.07 |
| MSK (2 x `kafka.t3.small` + 200 GB storage) | 86.58 |
| RDS (`db.t4g.micro` + 20 GB gp3) | 13.98 |
| ALB (fixed + low LCU usage) | 17.01 to 22.27 |
| Public IPv4 charges | 25.55 |
| Estimated base total | 206 to 212 |
| ECR / logs / transfer overhead | +5 to +30 |

Practical expected range: ~210 to ~240 USD/month.

Key takeaway: MSK is the dominant cost driver.
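
The totals can be reproduced from the table with a few lines of arithmetic. The figures are copied from the table above; the variable names are only for this sketch, and `Decimal` is used so the cents add up exactly.

```python
from decimal import Decimal

# Fixed monthly line items from the table (USD).
FIXED = {
    "ECS Fargate": Decimal("63.07"),
    "MSK": Decimal("86.58"),
    "RDS": Decimal("13.98"),
    "Public IPv4": Decimal("25.55"),
}
ALB_RANGE = (Decimal("17.01"), Decimal("22.27"))
OVERHEAD_RANGE = (Decimal("5"), Decimal("30"))

fixed_total = sum(FIXED.values(), Decimal("0"))
base_low = fixed_total + ALB_RANGE[0]
base_high = fixed_total + ALB_RANGE[1]
total_low = base_low + OVERHEAD_RANGE[0]
total_high = base_high + OVERHEAD_RANGE[1]
largest = max(FIXED, key=FIXED.get)

print(f"base:  {base_low} to {base_high} USD/month")
print(f"total: {total_low} to {total_high} USD/month")
print(f"largest line item: {largest} ({FIXED[largest] / base_low:.0%} of the low base)")
```

The base works out to 206.19 to 211.45 USD, and adding the overhead range gives 211.19 to 241.45 USD, which is where the rounded figures come from.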

Context for larger Kafka sizing:

- 3 x `kafka.t3.small` -> ~250 USD/month total
- 3 x `kafka.m5.large` -> ~610 USD/month total
- 3 x `kafka.m5.xlarge` -> ~1,070 USD/month total

What This Project Demonstrates

- Clear event contracts

- Isolation via consumer groups

- DLQ-driven failure modeling

- Observability-first mindset

- CI for distributed flows

- Infrastructure as Code

- Cost awareness